Finding duplicate files is a tedious task when you have millions of files spread all over your computer. To check whether two files are duplicates of each other, we have to compare the suspect files one-to-one. This is simple for small files, but a byte-by-byte comparison of large files is very time-consuming.

Imagine comparing two gigantic files to check whether they are duplicates. The time and effort such a task demands are probably enough to make you give up. An easy way to remove duplicates is to use an all-purpose duplicate cleaner such as Clone Files Checker. It relies on advanced algorithms and saves you time in organizing files.


Let’s imagine that we are downloading a large file over the Internet and need to make sure that it was not modified by a third party during transmission, as could happen in a man-in-the-middle attack. Comparing every byte against the original over the Internet would mean transferring the file a second time, which takes a long time and eats up a significant portion of your bandwidth.

So, how can we compare two files in a way that is reliable yet not laborious?

There are multiple ways to do so, including hash comparison, which has become a highly popular and reliable method.

What is a hash?

A hash function is a mathematical function that takes an object as input and produces a number or a string as output.

These input objects can vary from numbers and strings (text) to large byte streams (files or network data). The output is usually known as the hash of the input.

The main properties of a hash function are:

- Easy to compute

- Relatively small output
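As a rough illustration of these two properties, the sketch below hashes a five-byte input and a one-million-byte input. SHA-256 from Python's standard `hashlib` module is used here only as an example of a hash function; the variable names are made up for this illustration.

```python
import hashlib

short_input = b"hello"
large_input = b"x" * 1_000_000  # one million bytes

# Both calls return quickly, and both digests have the same fixed length.
short_digest = hashlib.sha256(short_input).hexdigest()
large_digest = hashlib.sha256(large_input).hexdigest()

print(len(short_input), "bytes ->", len(short_digest), "hex characters")
print(len(large_input), "bytes ->", len(large_digest), "hex characters")
```

Whatever the size of the input, the output stays at 64 hexadecimal characters (256 bits).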

Example

We can define a hash function for the numbers from 0 to 9999:

h(x) = x mod 100

With this hash function, there is a significant probability of getting the same hash (a collision) for two different inputs (e.g. 1 and 1001).
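The collisions in this toy function can be counted directly. The following sketch implements h(x) = x mod 100 and groups every input from 0 to 9999 by its hash; the `buckets` dictionary is just a helper for this illustration.

```python
from collections import defaultdict


def h(x: int) -> int:
    """Toy hash function from the example: h(x) = x mod 100."""
    return x % 100


# Group every input from 0 to 9999 by its hash value.
buckets = defaultdict(list)
for x in range(10_000):
    buckets[h(x)].append(x)

print(h(1), h(1001))      # both inputs hash to 1, so they collide
print(len(buckets))       # number of distinct hash values
print(len(buckets[1]))    # number of inputs that share the hash 1
```

With 10,000 inputs and only 100 possible outputs, every hash value is shared by 100 different inputs.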

So it is always better to have a hash function with fewer collisions, one that makes it difficult to find two inputs that give the same output.

While hashing is used in many applications such as hash-tables (data structure), compression and encryption, it is also used to generate checksums of files, network data and many other input types.

Checksum

A checksum is a small datum generated by applying a hash function to a large chunk of data. This hash function should have a minimal rate of collisions, so that the hashes for different inputs are almost unique. In other words, getting the same hash for different inputs is nearly impossible in practice.

These checksums are used to verify whether a segment of data has been modified. The checksum (from a known hash function) of the received data can be compared with the checksum provided by the sender to verify the integrity of the segment. The TCP/IP protocols apply the same idea: TCP segments carry a simple 16-bit checksum that the receiver recomputes to detect transmission errors.

In this way, if we generate two checksums for two files, we can declare that the two files aren’t duplicates if the checksums are different. If the checksums are equal, we can claim that the files are identical, considering the fact that getting the same hash for two different files is almost impossible.

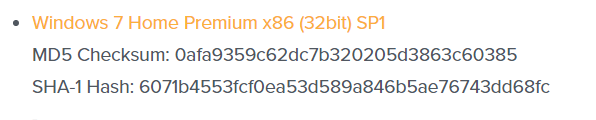
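The comparison described above could be sketched in Python as follows. This is a minimal illustration, not the method used by any particular tool: the function names `file_checksum` and `probably_duplicates` are hypothetical, SHA-256 is an assumed choice of hash, and the file-size check is an extra shortcut that the text does not mention.

```python
import hashlib
import os
import tempfile


def file_checksum(path, algorithm="sha256", chunk_size=1024 * 1024):
    """Hash a file in chunks so that large files never sit fully in memory."""
    digest = hashlib.new(algorithm)
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def probably_duplicates(path_a, path_b):
    """Treat two files as duplicates when their checksums are equal."""
    # Shortcut (an addition for this sketch): files of different sizes
    # cannot be identical, so hashing can be skipped.
    if os.path.getsize(path_a) != os.path.getsize(path_b):
        return False
    return file_checksum(path_a) == file_checksum(path_b)


# Small demonstration with temporary files.
with tempfile.TemporaryDirectory() as tmp:
    a, b, c = (os.path.join(tmp, name) for name in ("a.bin", "b.bin", "c.bin"))
    for path, data in ((a, b"duplicate data"), (b, b"duplicate data"), (c, b"duplicate datA")):
        with open(path, "wb") as f:
            f.write(data * 1000)
    same = probably_duplicates(a, b)
    different = probably_duplicates(a, c)

print(same, different)
```

Reading in chunks keeps memory use constant, which matters for the gigantic files this article is concerned with.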

Many websites also provide hashes of their files on the download pages, especially when the files are hosted on different servers. In such a scenario, the user can verify the integrity of a file by comparing the provided hash with the one generated from the downloaded file.
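Checking a download against a published hash might look like the sketch below. The function name `matches_published_hash` is hypothetical, SHA-256 is assumed as the algorithm the website used, and a temporary file stands in for the downloaded file.

```python
import hashlib
import hmac
import os
import tempfile


def matches_published_hash(path, published_hex, algorithm="sha256"):
    """Return True if the file's hash equals the hex string from the website."""
    digest = hashlib.new(algorithm)
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(65536), b""):
            digest.update(chunk)
    # Normalise whitespace and letter case before comparing the hex strings.
    return hmac.compare_digest(digest.hexdigest(), published_hex.strip().lower())


# Demonstration: a temporary file plays the role of the download.
with tempfile.TemporaryDirectory() as tmp:
    downloaded = os.path.join(tmp, "download.bin")
    with open(downloaded, "wb") as f:
        f.write(b"file contents")
    published = hashlib.sha256(b"file contents").hexdigest().upper()
    intact = matches_published_hash(downloaded, published)
    tampered = matches_published_hash(downloaded, "0" * 64)

print(intact, tampered)
```

In real use, `published` would be the string copied from the download page rather than a value computed locally.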

There are various hashing functions that are used to generate checksums. Here are some popular ones.

| Name | Output size |
| --- | --- |
| MD5 | 128 bits |
| SHA-1 | 160 bits |
| SHA-256 | 256 bits |
| SHA-512 | 512 bits |
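The output sizes in the table can be confirmed with Python's standard `hashlib` module, which implements all four functions. The `sizes` dictionary below is only a helper for this illustration.

```python
import hashlib

# Map each algorithm name to its output size in bits.
sizes = {}
for name in ("md5", "sha1", "sha256", "sha512"):
    sizes[name] = hashlib.new(name).digest_size * 8  # digest_size is in bytes

for name, bits in sizes.items():
    print(f"{name}: {bits} bits")
```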

MD5

MD5 is a popular hashing function that was initially used for cryptographic purposes. It generates a 128-bit output, but it is no longer considered secure because of vulnerabilities that later surfaced, including practical collision attacks. However, it can still be used as a checksum to detect unintentional changes or corruption in a file (or a data segment).

SHA-1

SHA-1 (Secure Hash Algorithm 1) is a cryptographic hashing function that generates a 160-bit output. It is no longer considered secure after many attacks, and web browsers have already stopped accepting SSL certificates based on SHA-1.

The later versions of SHA (SHA-256 and SHA-512) are more secure, as they incorporate vast improvements, and had no known practical vulnerabilities as of the time this article was published.

Features of a strong hash function

- Should be open – Everybody should know how the hashing is performed

- Easy to generate – Should not take too much time to generate the output

- Fewer collisions – The probability of getting the same hash for two different inputs should be close to zero

- Hard to back-trace – Given a hash, recovering the original input should not be feasible


Raza Ali Kazmi works as an editor and technology content writer at Sorcim Technologies (Pvt) Ltd. He loves to pen down articles on a wide array of technology related topics and has also been diligently testing software solutions on Windows & Mac platforms. If you have any question about the content, you can message me or the company's support team.
